AI Models Struggle with Complex Scientific Reasoning, Highlighting Key Limitations and Research Needs

AI models continue to improve but face significant hurdles in complex scientific reasoning tasks, exposing limitations that drive ongoing research to enhance accuracy, interpretability, and generalization.

Artificial intelligence has made tremendous strides across many fields, yet complex scientific reasoning remains a largely unsolved frontier. Despite advances in natural language understanding and pattern recognition, today's AI models often fall short on scientific problems that require integrating multiple concepts, understanding context, and drawing precise logical inferences.

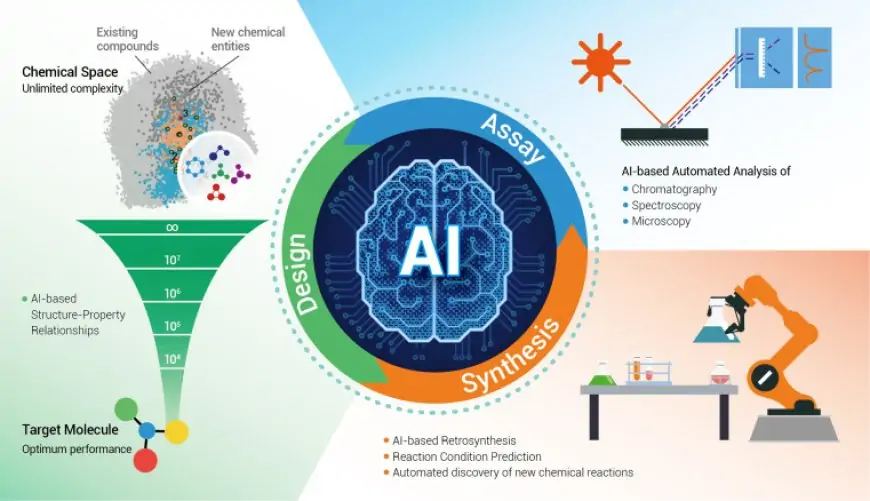

One major limitation lies in the models' reliance on pattern matching rather than genuine comprehension. Many current AI architectures, such as large language models, generate plausible responses based on statistical correlations in their training data but struggle to apply foundational scientific principles or derive novel insights consistently. This leads to errors in domains where nuanced reasoning or theory-driven deduction is crucial, such as physics, chemistry, and advanced biology.

Another issue is the challenge of generalization beyond training examples. Scientific reasoning often requires extrapolating from limited data or unfamiliar scenarios, a task where AI models exhibit brittleness. They may fail to handle unexpected variables or reasoning chains that differ from learned examples, limiting their utility in pioneering research or novel experimental design.
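
The brittleness described above can be illustrated with a deliberately tiny analogy. The sketch below is a toy, not a claim about any particular AI system: it fits a straight line to samples of a quadratic on a narrow range and then queries it far outside that range. The function names (`fit_line`, `true_law`) and the chosen data points are assumptions made for illustration only.

```python
def true_law(x: float) -> float:
    """The underlying relationship the fitted model never sees directly."""
    return x * x


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# "Training" data covers only the interval [0, 1].
train_x = [0.0, 0.25, 0.5, 0.75, 1.0]
train_y = [true_law(x) for x in train_x]
slope, intercept = fit_line(train_x, train_y)


def predict(x: float) -> float:
    return slope * x + intercept


# Worst error on the training range versus error at an unseen point.
in_range_error = max(abs(predict(x) - true_law(x)) for x in train_x)
out_of_range_error = abs(predict(3.0) - true_law(3.0))

print(f"worst in-range error:  {in_range_error:.3f}")
print(f"error at x = 3.0:      {out_of_range_error:.3f}")
```

The fitted line looks acceptable wherever it was trained and fails badly once the query leaves that region, because it captured a local pattern rather than the underlying law. Real models are far more complex, so this should be read only as an intuition for why extrapolation is harder than interpolation.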

Interpretability remains an ongoing concern. Understanding how AI models arrive at their conclusions is essential in scientific contexts, where traceability and reproducibility underpin trust. Black-box approaches hinder validation by experts and slow adoption in critical research workflows. Enhancing model transparency alongside performance is an active research area aiming to bridge this gap.

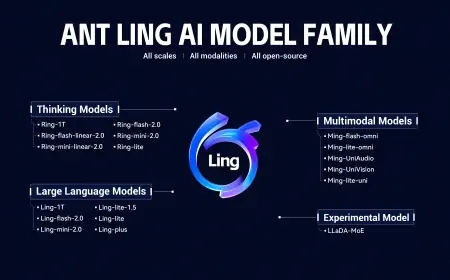

Efforts to address these limitations include hybrid systems that combine symbolic reasoning with neural networks, improved training on scientific literature, and reinforcement learning techniques that encourage logical consistency. Researchers are also exploring domain-specific models tailored to the unique demands and formal structures of individual scientific disciplines, rather than relying on general-purpose large-scale models alone.
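
One way to picture the hybrid idea is a propose-then-verify loop, where a learned component suggests answers and a symbolic component rejects those that violate a formal rule. The sketch below is a minimal illustration under stated assumptions: `propose_candidates` is a hypothetical stand-in for a neural model (here it just returns a fixed list), and the symbolic rule is a simple dimensional-analysis check. It does not represent any specific published system.

```python
from dataclasses import dataclass
from typing import List, Tuple

# A physical dimension as exponents of (mass, length, time).
Dim = Tuple[int, int, int]

MASS: Dim = (1, 0, 0)
VELOCITY: Dim = (0, 1, -1)
TIME: Dim = (0, 0, 1)
ENERGY: Dim = (1, 2, -2)


def mul(a: Dim, b: Dim) -> Dim:
    """Multiplying quantities adds their dimension exponents."""
    return tuple(x + y for x, y in zip(a, b))


def power(a: Dim, n: int) -> Dim:
    """Raising a quantity to a power scales its dimension exponents."""
    return tuple(x * n for x in a)


@dataclass
class Candidate:
    text: str  # formula as the proposer wrote it
    dim: Dim   # dimension derived from the formula's structure


def propose_candidates() -> List[Candidate]:
    """Hypothetical stand-in for a neural model proposing formulas
    for kinetic energy. A real system would generate these."""
    v_squared = power(VELOCITY, 2)
    return [
        Candidate("m * v", mul(MASS, VELOCITY)),
        Candidate("0.5 * m * v**2", mul(MASS, v_squared)),
        Candidate("m * v**2 / t", mul(mul(MASS, v_squared), power(TIME, -1))),
    ]


def filter_by_dimension(cands: List[Candidate], target: Dim) -> List[Candidate]:
    """Symbolic check: keep only candidates with the target dimension."""
    return [c for c in cands if c.dim == target]


accepted = filter_by_dimension(propose_candidates(), ENERGY)
for c in accepted:
    print("dimensionally consistent:", c.text)
```

A check like this can only rule candidates out. It cannot confirm the numeric factor of 0.5, for example, so in practice the symbolic layer narrows the search and leaves final validation to further tests or to a domain expert.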

The path forward requires cross-disciplinary collaboration that pairs AI expertise with domain science, so that models can contribute reliably to scientific discovery. While challenges in complex scientific reasoning persist, continued innovation is expanding what AI can achieve and points toward tools that augment human ingenuity in tackling the toughest scientific questions.