Nvidia Strikes $20 Billion Strategic Deal with Groq to Solve the AI Inference Bottleneck

In a move that has sent shockwaves through Silicon Valley, Nvidia has finalized a $20 billion licensing and talent agreement with AI chip startup Groq, securing the industry’s most advanced inference technology and poaching its top leadership to maintain its crown in the AI race.

The Biggest Move in AI Hardware History?

Just when the tech world thought the holiday season would be quiet, Nvidia CEO Jensen Huang has delivered a seismic shift to the semiconductor landscape. In a strategic maneuver valued at approximately $20 billion, Nvidia has entered into a massive licensing agreement with Groq, the startup famous for its ultra-fast "Language Processing Units" (LPUs). This isn’t just a simple technology transfer; it’s a high-stakes "acquihire" that sees Groq’s founder and CEO, Jonathan Ross, and President Sunny Madra joining the green team at Nvidia.

The deal comes at a pivotal moment. While Nvidia has dominated the market for training massive AI models—commanding roughly 92% of the data center GPU market in 2024—the industry is rapidly shifting toward inference. Inference is the stage where a model actually generates an answer for a user, and it is here that Groq’s specialized architecture has consistently outperformed Nvidia’s general-purpose GPUs in terms of raw speed and energy efficiency.


Why Nvidia is Paying a Premium for "Inference"

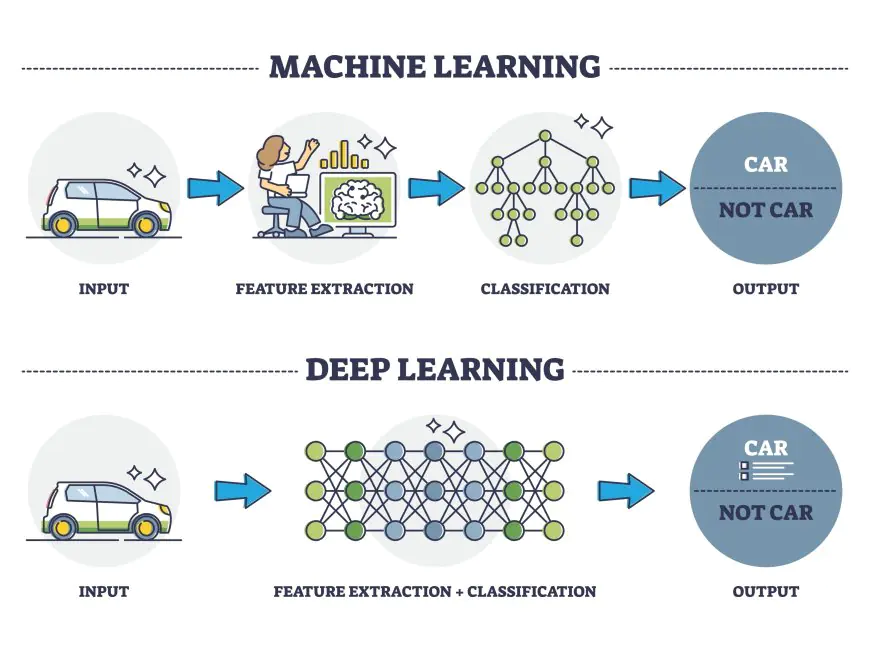

For the uninitiated, the distinction between training and inference is the difference between writing a textbook and reading it aloud. Training requires massive, brute-force computational power, which Nvidia’s H100 and Blackwell chips provide in spades. However, as AI applications like ChatGPT and Gemini scale to billions of users, the cost and speed of delivering responses (inference) become the ultimate bottlenecks.

Groq claims its technology can run generative AI models up to 10 times faster while consuming significantly less power. By integrating this "LPU" logic into its future architecture, Nvidia isn’t just buying a product; it’s plugging a hole in its armor. As reported by CNBC, the move allows Nvidia to offer a "full-stack" solution optimized for both the birth and the daily life of an AI model.

The Regulatory Chess Match

Perhaps the most fascinating aspect of this deal is its structure. Rather than a traditional acquisition—which would almost certainly trigger a year-long investigation from the FTC and European regulators—Nvidia has opted for a "non-exclusive licensing agreement." This follows a growing trend in the industry, similar to Microsoft’s deal with Inflection AI and Amazon’s partnership with Adept.

By licensing the technology and hiring the talent rather than buying the entire company, Nvidia effectively bypasses the most grueling antitrust hurdles. Groq will technically remain an independent entity under its new CEO, former CFO Simon Edwards, and will continue to operate its GroqCloud service. However, with the core R&D team now under Nvidia’s roof, the competitive threat Groq once posed as a standalone rival has been effectively neutralized.

A Talent Grab with Deep Roots

For Jensen Huang, hiring Jonathan Ross is a personal and strategic victory. Ross was a key architect behind Google’s original Tensor Processing Units (TPUs), the very chips that gave Google a rare hardware advantage over Nvidia years ago. Bringing Ross into the fold doesn't just improve Nvidia's hardware; it deprives rivals like Google and Amazon of a potential ally in the fight for inference supremacy.

What This Means for the Future

As we move into 2026, the battle for the "AI Factory" is entering a new phase. The global AI inference market is projected to reach over $255 billion by 2030, and with this deal, Nvidia has made it clear it doesn't intend to let any of that revenue slip away.

For enterprises, this could lead to more efficient, lower-latency AI tools. For the competition, it’s a wake-up call that Nvidia’s massive $60 billion cash pile is a weapon the company is more than willing to use. You can find more details on the evolving infrastructure landscape in our recent analysis of AI market shifts.

The message from Santa Clara is loud and clear: Nvidia isn't just winning the training race; it’s positioning itself to own the conversation, one ultra-fast token at a time.