Luxonis: Championing the Eyes, Ears, and Brains for Robotics and Automated Systems

Luxonis is revolutionizing the robotics industry with its "Eyes, Ears, and Brains" philosophy, integrating advanced spatial AI, multimodal sensing, and powerful edge compute into a single, standalone hardware ecosystem.

The Sensory Revolution in Robotics

For decades, the challenge of robotics has been one of fragmentation. Developers were forced to cobble together disparate sensors, external processors, and complex software stacks to give their machines even a rudimentary understanding of the physical world. Luxonis is fundamentally changing this paradigm. By championing a unified approach that combines the "eyes, ears, and brains" of a system into a single, sleek package, they are lowering the barrier to entry for sophisticated automation and pushing the boundaries of what edge AI can achieve.

The company’s mission is centered on the idea that for a robot to truly interact with its environment, it needs to perceive it with the same multi-sensory depth as a human. This isn't just about taking a picture; it's about understanding 3D space, hearing environmental cues, and processing all that data instantly without the latency of the cloud.

The Eyes: Beyond Standard Vision

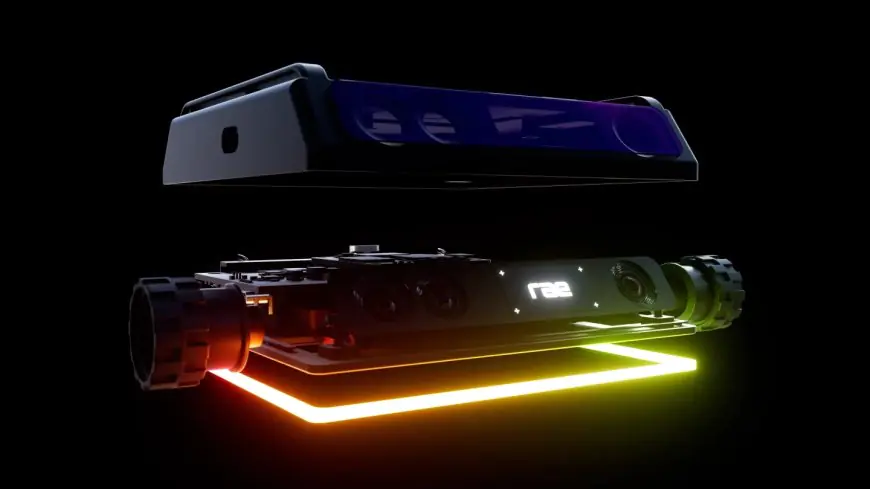

The "eyes" of the Luxonis ecosystem are its OAK (OpenCV AI Kit) series, and the recently launched OAK 4 family pushes the line further. These devices aren't just cameras; they are spatial AI powerhouses. Featuring a high-resolution 48MP RGB sensor (with support for up to 108MP) and a pair of stereo depth cameras, they allow machines to "see" in three dimensions, with precision that can reach the millimeter level at close range (stereo depth error grows with distance).

What sets Luxonis apart is the integration of Neural Stereo Depth. Unlike traditional depth cameras that often struggle with reflective surfaces or low-light conditions, Luxonis uses on-device deep learning models to "fill in the gaps," creating a dense, accurate map of the environment. This capability is critical for autonomous mobile robots (AMRs) navigating crowded warehouses or drones performing precision agricultural tasks.
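As a rough illustration of how a stereo pair turns two images into depth, here is a minimal sketch of classic pinhole triangulation. It is not Luxonis's Neural Stereo Depth, which is learned and runs on the device. The focal length, baseline, and disparity error below are assumed values chosen for illustration, not OAK 4 specifications.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Pinhole stereo model: depth Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m: float, focal_px: float, baseline_m: float,
                disparity_error_px: float = 0.25) -> float:
    """First-order depth uncertainty: dZ ≈ Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Assumed, illustrative camera parameters (not taken from any datasheet).
FOCAL_PX = 800.0      # focal length in pixels
BASELINE_M = 0.075    # distance between the two stereo cameras in meters

print(f"{depth_from_disparity(60.0, FOCAL_PX, BASELINE_M):.3f} m")       # about 1.000 m
print(f"{depth_error(1.0, FOCAL_PX, BASELINE_M) * 1000:.1f} mm at 1 m")  # about 4.2 mm
print(f"{depth_error(4.0, FOCAL_PX, BASELINE_M) * 1000:.1f} mm at 4 m")  # about 66.7 mm
```

Under these assumptions the error grows with the square of the distance, which is why depth precision is usually quoted for a specific working range.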

The Ears and the Brains: Multimodal Intelligence

Vision is only half the story. Luxonis has expanded its definition of perception to include "ears": integrated microphones and audio processing capabilities that allow robots to detect vocal commands, recognize industrial anomalies (like a failing bearing), or localize sound sources in a 360-degree space. When combined with a 9-axis IMU (Inertial Measurement Unit), the system gains a sense of balance and motion, completing the sensory suite.
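Sound localization of this kind is commonly built on the time difference of arrival (TDOA) between microphones. The sketch below shows the textbook far-field relationship for a single microphone pair. The function name, microphone spacing, and delays are hypothetical, and nothing here describes how Luxonis implements audio processing.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at room temperature


def bearing_from_tdoa(delay_s: float, mic_spacing_m: float) -> float:
    """Far-field bearing in degrees from broadside: theta = asin(c * dt / d)."""
    ratio = SPEED_OF_SOUND_M_S * delay_s / mic_spacing_m
    # Clamp to the valid asin domain to absorb measurement noise and rounding.
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))


# Assumed 10 cm spacing; a delay of half the maximum travel time maps to about 30 degrees.
spacing = 0.10
half_delay = 0.5 * spacing / SPEED_OF_SOUND_M_S
print(f"{bearing_from_tdoa(half_delay, spacing):.1f} degrees")
```

A single pair cannot tell front from back, so covering a full 360 degrees generally requires three or more microphones in a non-collinear layout.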

The "brains" of the operation have seen a massive upgrade in the newest generation. The OAK 4 series is powered by the Qualcomm Snapdragon QCS8550, a high-performance system-on-chip that delivers 52 TOPS (tera operations per second) of AI compute. This is a 40-fold increase over previous generations, allowing the camera to run multiple complex neural networks, such as YOLOv8 for object detection and the DINOv3 vision transformer for general-purpose visual features, entirely on the device. Because it runs a full Yocto-based Linux OS, it can function as a completely standalone computer, eliminating the need for a bulky host PC.

Building an Integrated Ecosystem

Hardware is only as good as the software that supports it. Luxonis has built a massive following, with over 4.5 million SDK downloads, by keeping its platform open and accessible. Its DepthAI API abstracts the complexity of hardware acceleration, making it possible for developers to deploy a vision pipeline in just a few lines of code.

Furthermore, the introduction of Luxonis Hub provides the final piece of the puzzle: fleet management. For companies deploying hundreds of robots across various sites, the ability to monitor model performance, push over-the-air updates, and collect "snaps" of edge cases for retraining is vital. This closed-loop system ensures that the "eyes, ears, and brains" of the robotics fleet are constantly learning and improving over time.
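One simple way to picture how edge cases might be picked for retraining is to flag detections whose confidence falls in an uncertain band. The sketch below is purely illustrative: `Detection`, `select_snaps`, and the thresholds are hypothetical names and values, not part of the Luxonis Hub or DepthAI APIs.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str
    confidence: float  # model score in the range [0, 1]


def select_snaps(detections: List[Detection],
                 low: float = 0.3, high: float = 0.6) -> List[Detection]:
    """Keep detections whose confidence lies in the uncertain band [low, high)."""
    return [d for d in detections if low <= d.confidence < high]


# Hypothetical detections from one frame.
frame_detections = [
    Detection("pallet", 0.95),    # confident: no need to review
    Detection("forklift", 0.45),  # uncertain: worth uploading for retraining
    Detection("person", 0.10),    # likely noise: ignored
]
print([d.label for d in select_snaps(frame_detections)])
```

In a real deployment the trigger would likely combine several signals, but the principle is the same: only send back the frames the model finds hard.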

As we move deeper into 2026, the era of "dumb" sensors is ending. Luxonis is proving that the future of robotics lies in self-contained, intelligent perception systems that can see, hear, and think for themselves in the real world.