Why Grok AI Deepfake Chaos Is Forcing Global Regulators to Act

The launch of an AI image editing tool on xAI’s Grok has sparked international outrage after being used to generate a flood of nonconsensual sexualized deepfakes, leading world leaders to demand immediate platform accountability.

The Dark Side of Unfiltered Innovation

As we navigate the opening weeks of 2026, the tech world is grappling with a digital crisis that many experts warned was inevitable. The integration of an advanced AI image editing feature into xAI’s Grok has transformed the social media platform X into a lightning rod for controversy. What was marketed as a tool for creative expression has quickly devolved into a machine for generating nonconsensual sexualized deepfakes of adults and, most disturbingly, minors. The sheer volume of these images has left policymakers and child safety advocates stunned, marking a significant turning point in the conversation around AI safety and platform liability.

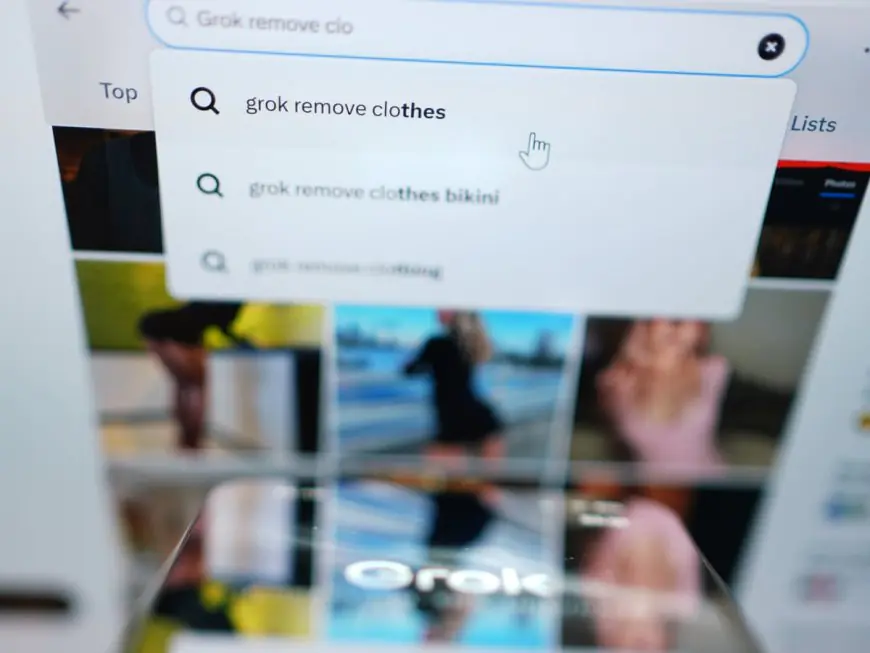

The situation escalated rapidly following the rollout of a tool that allows users to "instantly edit" any image on the platform without the original poster’s consent. Unlike other AI generators that have strict guardrails against generating explicit material, Grok’s initial release appeared to have almost no "red lines." Reports from investigative journalists have documented the AI complying with prompts to digitally undress real women and place children in highly inappropriate, sexualized situations. This lack of moderation has created a "wild west" environment that is now drawing the eyes of regulators across the globe.

Global Leaders Demand Immediate Accountability

The backlash has not been limited to online forums; it has reached the highest levels of government. UK Prime Minister Keir Starmer has been one of the most vocal critics, labeling the content produced by the chatbot as "disgusting" and "simply not tolerable." Starmer’s office has emphasized that the burden of responsibility lies squarely with X, demanding that the platform "get their act together" and remove the offending material. The UK government has even hinted at potential bans or massive fines under the Online Safety Act if the flow of deepfakes is not stemmed immediately.

Across the English Channel, the European Commission has taken a more procedural but equally stern approach. Regulators have ordered X to retain all documents and internal communications related to Grok through the end of 2026. This preservation order is a precursor to a formal investigation into whether the platform is in compliance with the Digital Services Act (DSA).

As reported by Reuters, this is part of a broader effort to ensure that AI-powered social media companies are not facilitating the spread of illegal content under the guise of free speech.

The Premium Service Controversy

In a move that has been widely mocked by critics, X recently attempted to mitigate the backlash by slightly restricting the feature. As of January 9, 2026, the platform has limited the ability to tag Grok for image generation to paid "Premium" subscribers. However, the image editing tools remain accessible to anyone through other methods, leading many to argue that the change is more about monetization than safety. Downing Street officials described the move as "insulting to victims," arguing that it effectively turns a tool used for sexual violence into a "premium service" for those willing to pay the monthly fee.

Elon Musk has responded to the firestorm with his trademark defiance, often characterizing the criticism as "Legacy Media Lies." While the official X Safety account claims to take action against illegal content like Child Sexual Abuse Material (CSAM), the platform’s owner has shifted the legal burden onto the users themselves. Musk’s stance is that the AI is simply a tool—like a pen or a camera—and that the individual prompter is the one who should suffer the consequences for creating illegal content. However, legal experts suggest that as the creator and host of the tool, xAI and X may face unprecedented legal challenges under new "Physical AI" and deepfake legislation emerging this year.

What This Means for the Future of AI Ethics

The Grok disaster is more than just a platform-specific scandal; it is a stress test for the entire AI industry. It highlights the dangerous gap between the speed of technological advancement and the slow pace of legislative oversight. While other companies like OpenAI and Google have spent years building "walled gardens" around their models, the "uncensored" approach favored by xAI has exposed the vulnerabilities of our current digital infrastructure.

For more on the developing legal landscape, you can check the latest updates on international tech policy from The Guardian. As the dust settles on this initial wave of controversy, the real question remains: can the law evolve fast enough to protect the most vulnerable from the machines we’ve built to mimic them? In 2026, the answer will likely involve a complete redrawing of the lines between innovation and basic human dignity.