AI expert cautions against viral ChatGPT caricature trend

While millions are laughing at their exaggerated digital doppelgängers, security experts are sounding a high-decibel alarm. The viral AI caricature trend is being flagged as a "biometric goldmine," raising critical concerns over data privacy, facial recognition training, and the long-term misuse of personal imagery.

The Viral Funhouse Mirror: At What Cost?

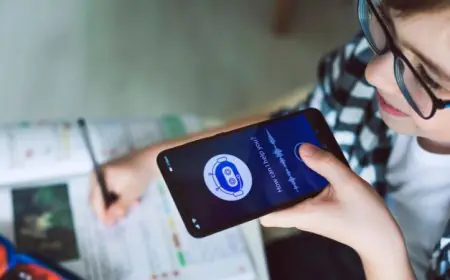

In early 2026, social media has been flooded with a wave of "AI caricatures": whimsical, distorted, and often hilarious versions of our own faces generated by the latest ChatGPT and specialized vision models. It feels like harmless fun, a digital version of a boardwalk artist’s sketch. However, as the trend hits a global fever pitch, top security and ethics specialists are stepping in to break up the party. The message is clear: when you upload your face to a viral AI trend, you aren't just getting a funny picture; you’re handing over the keys to your biometric kingdom.

“We need to stop seeing these as just ‘filters’ and start seeing them as high-fidelity data extraction tools,” warns Dr. Elena Vance, a leading AI ethics researcher. “The precision required to create a caricature that actually looks like you—while distorting you—requires the AI to map your facial geometry with terrifying accuracy.”

The Hidden Risks: Biometrics and "Invisible" Training

The core of the concern lies in Biometric Harvesting. Unlike a standard photo, which a human looks at, an AI "sees" a photo as a series of mathematical coordinates: the distance between your pupils, the curve of your jaw, and the unique markers of your bone structure.
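To make the idea of a face as "mathematical coordinates" concrete, here is a minimal sketch in Python. It is an illustration only, not how ChatGPT or any specific app works: the four landmark names, their pixel values, and the `face_signature` helper are all hypothetical, and real systems track far more points.

```python
import math

# Hypothetical 2D landmark positions (in pixels) for one face.
# Names and values are illustrative assumptions, not real model output.
LANDMARKS = {
    "left_pupil": (120.0, 150.0),
    "right_pupil": (180.0, 150.0),
    "nose_tip": (150.0, 190.0),
    "chin": (150.0, 260.0),
}


def distance(a, b):
    """Straight-line distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def face_signature(landmarks):
    """Return facial distances as ratios of the distance between the pupils.

    Dividing by the inter-pupil distance removes the effect of image size,
    so the same face gives the same numbers at any resolution.
    """
    pupil_gap = distance(landmarks["left_pupil"], landmarks["right_pupil"])
    pairs = [
        ("left_pupil", "nose_tip"),
        ("right_pupil", "nose_tip"),
        ("nose_tip", "chin"),
    ]
    return [round(distance(landmarks[a], landmarks[b]) / pupil_gap, 3) for a, b in pairs]


signature = face_signature(LANDMARKS)
print(signature)  # [0.833, 0.833, 1.167]
```

Even this toy version shows why experts treat the numbers as sensitive: the ratios stay the same when the photo is resized or re-shared, so they describe the face rather than any one image of it.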

Experts highlight three primary "Red Zone" risks associated with the current trend:

- Biometric Fingerprinting: Many of the "free" apps riding the coattails of the ChatGPT trend have opaque Terms of Service. By participating, users may be inadvertently granting companies the right to use their facial geometry for facial recognition databases or "identity verification" testing.

- The Deepfake Pipeline: To create a convincing caricature, the AI learns the nuances of your expressions. In the wrong hands, this data is the perfect foundation for creating high-fidelity deepfakes that can bypass "liveness" checks used by banking and security apps.

- Permanent Data Trails: Once your facial data is ingested into a large-scale model's training set, it is nearly impossible to "delete." You effectively become a permanent, uncompensated part of the software's intellectual property.

The "Terms of Service" Trap

A common misconception is that because ChatGPT is developed by OpenAI, all "caricature GPTs" share the same safety standards. In reality, many third-party developers create "wrappers" or standalone apps that claim to use ChatGPT but actually route your data through unsecured servers.

According to a 2026 report from the Digital Rights Watch, 74% of the top 20 caricature-generating apps analyzed contained clauses that allow the developer to sell "anonymized" facial data to third-party advertisers. As the saying goes in the tech world: If the product is free (and funny), you are the product.

“The irony of the caricature trend is that it uses your most unique physical traits to make you a generic data point in a multi-billion dollar AI training industry.” — Cybersecurity Analyst, James Thorne

How to Participate Without the Risk

If you can't resist the urge to see yourself as a Pixar-style character or a 1920s political cartoon, experts suggest a "Safety First" approach to digital identity:

| Safety Level | Action Recommended |
|---|---|
| Highest | Do not upload real photos. Use "AI-generated" base faces or highly stylized avatars. |
| Moderate | Only use official, first-party apps (e.g., the official ChatGPT app) and disable "Chat History & Training" in settings. |
| Low | Avoid "New" or "Trending" third-party apps that require social media logins to function. |

Conclusion: The Face of the Future

The AI caricature trend is a fascinating look at our desire for creative self-expression through technology. But as we move further into 2026, the line between "fun" and "functional risk" is blurring. The caution from experts isn't meant to kill the joy of the internet, but to ensure that the face you see in the mirror—and the one you use to unlock your phone—remains exclusively yours. Before you click "Generate," ask yourself: is the laugh worth the legacy of your data?