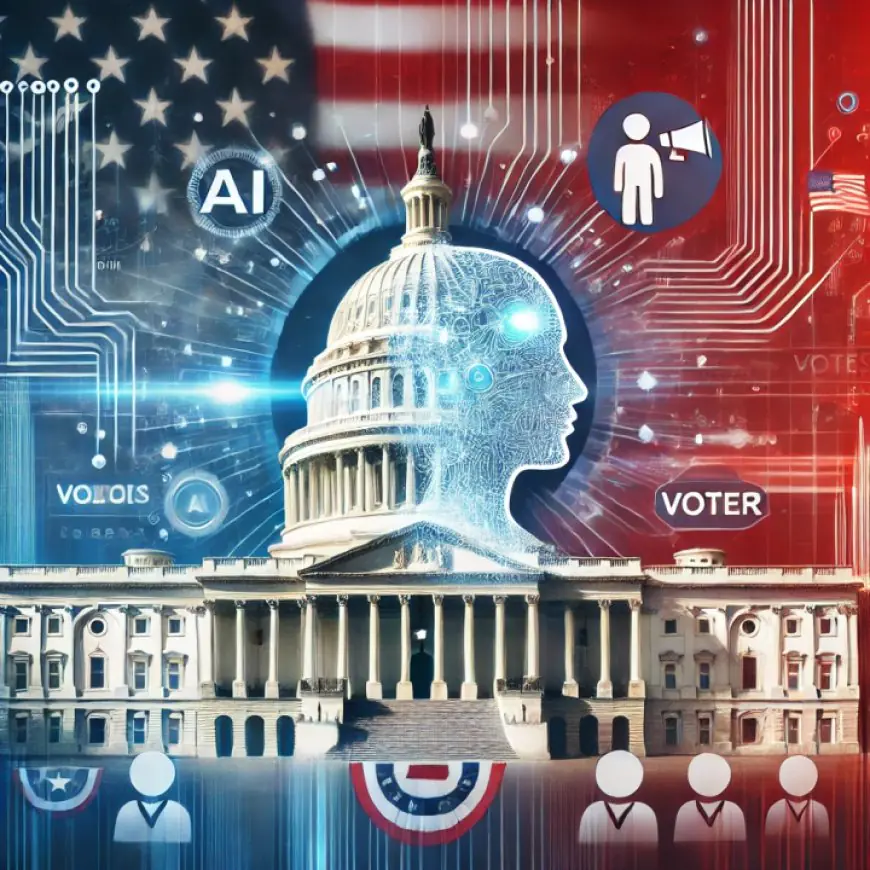

How AI in Political Advertising Is Reshaping Elections and Sparking a Regulatory Firestorm

The 2026 election cycle is witnessing an unprecedented surge in AI-generated political content, from lifelike deepfakes to hyper-personalized voter targeting. As candidates experiment with these powerful tools, a massive debate over ethics, transparency, and federal regulation is dividing lawmakers and tech giants alike.

The New Frontier of Political Persuasion

As the United States hurtles toward another high-stakes election season in 2026, the digital battlefield has undergone a radical transformation. Artificial intelligence is no longer just a buzzword in Silicon Valley; it has become the centerpiece of political strategy. From commercials in which "synthetic" versions of candidates deliver words they never actually spoke to AI-driven microtargeting that can predict a voter’s concerns with unsettling accuracy, the technology is moving faster than the laws meant to govern it.

Recent campaign cycles have seen a flurry of controversial ads. In one instance, a gubernatorial candidate used AI to simulate a rival's voice in a radio spot, claiming it was a "what if" scenario. While the campaign defended it as a modern form of political satire, critics labeled it a dangerous deepfake designed to deceive the uninformed. This tension between innovation and integrity has sparked a nationwide backlash, prompting voters and advocacy groups to demand clearer boundaries.

The Regulatory Vacuum and the FEC Stance

Despite the growing outcry, federal oversight remains a patchwork of interpretations. The Federal Election Commission (FEC) has largely maintained a "technology-neutral" stance. Rather than drafting entirely new rules for AI, the commission has clarified that existing laws against fraudulent misrepresentation apply to AI-generated content. However, this case-by-case approach has left many wondering whether it is enough to stop a flood of misinformation.

The FEC's current position is that it is illegal for a candidate to use AI to falsely claim to speak on behalf of another candidate, whether to solicit money or to damage that opponent. But what about ads that simply use "style transfer" or "deepfake" imagery for rhetorical effect? That is where the legal gray zone begins. Recent interpretive rulings have confirmed that while the technology is new, the standard of fraud remains the same, which leaves a significant burden on tech platforms and fact-checkers to police the nuances of "deception."

State Legislatures Step Into the Gap

With Washington, D.C., moving slowly, state governments have become the primary laboratories for AI regulation. At least 26 states have now enacted laws specifically targeting deepfakes in elections. California and Minnesota, for example, have passed legislation requiring clear disclaimers on any political ad that uses generative AI to significantly alter an individual's appearance or speech.

However, these state-level efforts are facing fierce legal challenges. Federal courts have already struck down portions of some state laws, citing First Amendment concerns. Judges are wary of "censorship" and the "chilling effect" that broad bans could have on legitimate political speech and satire. This has created a confusing landscape for national campaigns, which must now navigate different rules in every state they target.

Ethics vs. Efficacy: Can We Trust the Ballot?

The ethical debate centers on the concept of informed consent. Should a voter be told if the person speaking to them in a YouTube ad is a digital ghost? Organizations like the American Association of Political Consultants have condemned the use of deceptive generative AI, yet the temptation for campaigns to use these tools is immense. AI can produce content at a fraction of the cost of traditional film crews, allowing underfunded "dark horse" candidates to compete with established political machines.

As we look toward the future of democracy, the question isn’t whether AI will be used but how we will verify the truth. Experts suggest that the answer may lie in "watermarking" technology and blockchain-based authentication, which would allow users to trace a video back to its source. Until those systems are universal, the burden remains on the voter to maintain a healthy dose of skepticism.

Looking Ahead

The 2026 midterms are proving to be the ultimate stress test for the American electoral system. As the line between reality and simulation blurs, the demand for federal transparency laws is only growing louder. Whether the US can reach a consensus on AI ethics before the next major vote remains to be seen.