Why AI Chatbots Are Being Flagged as a Hidden Danger for Medical Advice

A new study reveals that while AI chatbots ace medical exams, they perform far worse in real-world use. With the correct condition identified in fewer than 34.5% of cases involving real users, experts warn of "dangerous" errors, including advice that could prove fatal, highlighting the critical gap between artificial intelligence and human medical care.

The Illusion of Competence

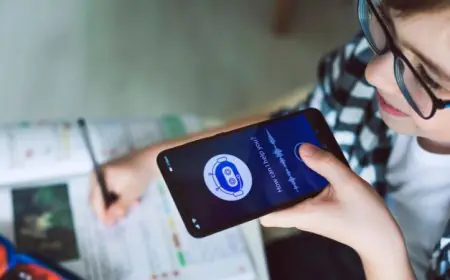

For the past year, the tech world has been buzzing with headlines about artificial intelligence passing medical licensing exams with flying colors. Those headlines painted a futuristic picture where a doctor was just a prompt away. However, a startling new study from the University of Oxford has shattered this illusion, flagging AI chatbots as not just unreliable, but potentially "dangerous" when placed in the hands of everyday patients.

The research, published in Nature Medicine, highlights a critical distinction that has been largely overlooked: the difference between an AI taking a test and an AI treating a human. While models like GPT-4o and Llama 3 can score above 95% on standardized medical benchmarks, their performance plummets when interacting with real people who may not know how to describe their symptoms perfectly.

The Numbers: A Stark Reality Check

The study’s findings are a sobering wake-up call for anyone relying on "Dr. Chatbot." When tested with roughly 1,300 participants, the accuracy of these AI systems in identifying relevant medical conditions dropped precipitously. In real-world scenarios, the AI identified the correct condition in fewer than 34.5% of cases.

This massive gap exists because of what researchers call a "communication breakdown." Unlike a trained physician who knows how to ask probing questions to uncover hidden details, chatbots tend to take user input at face value. If a patient omits a crucial detail, the AI rarely digs deeper, leading to confident but completely wrong diagnoses.

Life-Threatening Errors

The stakes of these errors are incredibly high. The study documented instances where the advice given could have led to fatal outcomes. In one chilling example involving symptoms of a subarachnoid hemorrhage (a life-threatening brain bleed), two different users received opposite advice:

- One user was correctly advised to seek emergency care.
- The other was told to simply "lie down in a dark room," a recommendation that could have resulted in death.

Dr. Rebecca Payne, a General Practitioner and the study’s lead medical practitioner, didn’t mince words regarding the findings. "Patients need to be aware that asking a large language model about their symptoms can be dangerous," she stated, emphasizing that these tools often fail to recognize when urgent help is needed.

The "Trained User" Fallacy

Part of the problem lies in the assumption that users know how to prompt an AI effectively. The study revealed that everyday people often struggle to distinguish between good and bad advice provided by these models. The chatbots frequently offered a "mix of good and bad information," leaving the user to guess which parts were accurate.

This creates a false sense of security. Because the AI speaks with authoritative, grammatically perfect confidence, users are lulled into trusting its judgment. A separate survey by the Canadian Medical Association echoed these concerns, finding that patients who followed AI health advice were five times more likely to report experiencing an adverse reaction compared to those who didn't.

Moving Forward with Caution

This doesn't mean AI has no place in medicine. The technology still holds immense promise for administrative tasks, summarizing notes, or acting as a support tool for trained professionals. However, as a direct-to-consumer diagnostic tool, it is currently failing the safety test.

For now, the advice from the medical community is clear: use AI to draft an email or plan a trip, but when it comes to your health, keep the conversation between you and a qualified human professional. The risks of a "hallucinated" diagnosis are simply too high to ignore.