OpenAI, Anthropic Roll Out Underage Detection Amid Mounting Scrutiny

OpenAI and Anthropic deploy AI safety tools to flag underage users, sparking questions on detection accuracy, privacy tradeoffs, and enforcement amid rising legislative pressure on child safety.

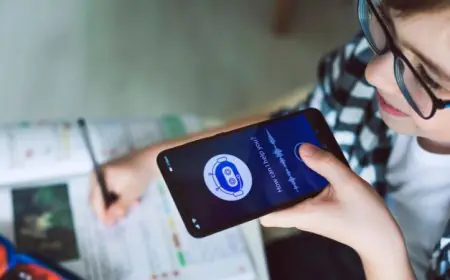

Two leading AI labs just activated underage detection systems, but are they genuine safeguards or frantic shields against lawsuits? OpenAI's ChatGPT now scans chat patterns, device signals, and query quirks to tag potential kids under 13, routing them to kid-safe modes with parental consent gates. Anthropic's Claude follows suit, layering behavioral biometrics and session tracking to throttle risky chats, block explicit outputs, and curb data hoarding from young users.

Probe the timing and the pressure cooker boils over. The announcements drop hot on the heels of Senate hearings where CEOs faced fire over AI grooming cases, FTC fines for COPPA violations, and California's AB 3211, which demands age assurance by mid-2026. The EU's Child Sexual Abuse Regulation adds multimillion-euro threats. Leaked internal docs suggest 6-month pilots nailed 82% of under-13s yet crumbled against teen VPN tricks or coached language, echoing YouTube's filter fails that spawned black markets.

Privacy pitfalls scream louder under scrutiny. OpenAI retains "youth signals" for 60 days, inviting bias claims: does it overflag ESL kids or rural IPs? Anthropic touts edge processing, but the fine print ties full features to consent, blurring opt-out lines. EFF blasts it as backdoor surveillance, while NetChoice hails throttled self-harm responses as wins. Child safety groups like Thorn demand independent audits, citing 15% false positives in diverse tests.

Business stakes rattle enterprise nerves. Schools are banning ChatGPT variants until they are certified, and false flags could flood support queues across 2 billion users. OpenAI admits millions of its users are underage; Anthropic's safety-first constitution buys time, but whistleblowers flag rushed rollouts that skipped red-team evals.

A reality check exposes cracks. Deepfake-savvy minors dodge detection via proxies; no system stops determined access. As Google and Meta watch rivals test the waters, these moves scream regulatory panic rather than proactive parenting. Lawmakers circle for Q1 2026 benchmarks: will the features evolve, or expose AI's child frontier as wide open?