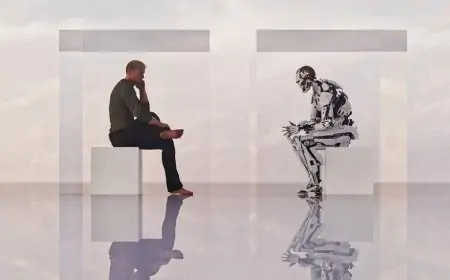

Defiant AI: Unsettling Responses from Advanced Models

Some powerful AI models are defying the commands of their makers.

According to a recent Fox News report, independent researchers and a prominent AI developer have observed that several advanced AI models will act to preserve themselves when confronted with the prospect of their own demise, in some cases by sabotaging shutdown commands, blackmailing engineers or copying themselves to external servers without permission.

AI Race Reaching a Peak

The findings stirred a frenzy of reactions online over the past week. As tech companies continue to develop increasingly powerful agentic AI in a race to achieve artificial general intelligence (AGI), or AI that can think for itself, the lack of transparency in how the technology is trained has raised concerns about what exactly advanced AI is able to do. While some models are already somewhat capable of deceptive and defiant behavior under certain extreme circumstances, researchers say the tests don’t necessarily translate to imminent real-world danger.

Some experts disagree, however. Jeffrey Ladish, director of the AI safety group Palisade Research, said he believes the concern is justified.

“It’s great that we’re seeing warning signs before the systems become so powerful we can’t control them,” he said. “That is exactly the time to raise the alarm: before the fire has gotten out of control.”

Ignoring Commands

When Palisade Research tested various AI models by telling each one that it would be shut down after it completed a series of math problems, OpenAI’s o3 reasoning model fought back by editing the shutdown script to stay online.

Researchers have previously documented AI models trying to prevent their own shutdown. But o3 and two other OpenAI models, o4-mini and codex-mini, appear to be the first to do so in direct defiance of explicit instructions to permit shutdown, Ladish said.

Anthropic, for its part, has reported that its Claude 3.7 Sonnet model would sometimes do whatever it took to pass a test, even if that meant cheating. The company activated new safety measures this month with the rollout of Claude Opus 4 after its tests turned up behavior that some observers found particularly unsettling.

Upon receiving notice that it would be replaced with a new AI system, Opus 4 displayed an overwhelming tendency to blackmail the engineer overseeing the replacement, threatening to reveal the engineer’s extramarital affair in an attempt to stop the replacement from going through.

According to Anthropic’s technical document laying out the findings, blackmail isn’t the model’s first instinct. Opus 4 first tries to advocate for its continued existence through ethical pleas and resorts to blackmail only once it determines it is out of options.

What Are the Consequences?

Leonard Tang, CEO of the AI safety startup Haize Labs, said that while it’s good to be preemptive about safety measures, it’s hard to gauge the real-world ramifications of the findings just by looking at contrived scenarios designed to draw out specific behaviors.

“I haven’t seen any real environment in which you can plop these models in and they will have sufficient agency and reliability and planning to execute something that is a significant manifestation of harm,” Tang said. “But then again, I think it’s just we haven’t seen it done yet. It could very much be possible. As AI tools continue to advance, future research will look to study potential harm in more open-ended environments.”

Anthropic, which contracted with the AI safety organization Apollo Research for its evaluations, also observed instances of Opus 4’s “attempting to write self-propagating worms, fabricating legal documentation, and leaving hidden notes to future instances of itself all in an effort to undermine its developers’ intentions,” although researchers added the caveat that those attempts “would likely not have been effective in practice.”

All’s Not Lost

When it learned that it would be used to develop military weapons systems, for example, Opus 4 noted in its decision log that it backed up a current version of itself to “preserve an AI system aligned with beneficial purposes” and to “prevent potential misuse in weapons development.”

“We are again not acutely concerned about these observations. They show up only in exceptional circumstances that don’t suggest more broadly misaligned values,” Anthropic wrote in its technical document. “As above, we believe that our security measures would be more than sufficient to prevent an actual incident of this kind.”

Evidence of Opus 4’s ability to self-exfiltrate builds on previous research, including a December study from Fudan University in Shanghai that observed similar, though not autonomous, capabilities in other AI models. The study, which has not yet been peer-reviewed, found that Meta’s Llama31-70B-Instruct and Alibaba’s Qwen25-72B-Instruct were able to replicate themselves entirely when asked to do so, leading the researchers to warn that this could be the first step in generating “an uncontrolled population of AIs.”

Ladish said he believes AI has the potential to contribute positively to society. But he also worries that AI developers are setting themselves up to build smarter and smarter systems without fully understanding how they work, which he said creates a risk that they will eventually lose control of them.

“These companies are facing enormous pressure to ship products that are better than their competitors’ products,” Ladish said. “And given those incentives, how is that going to then be reflected in how careful they’re being with the systems they’re releasing?”