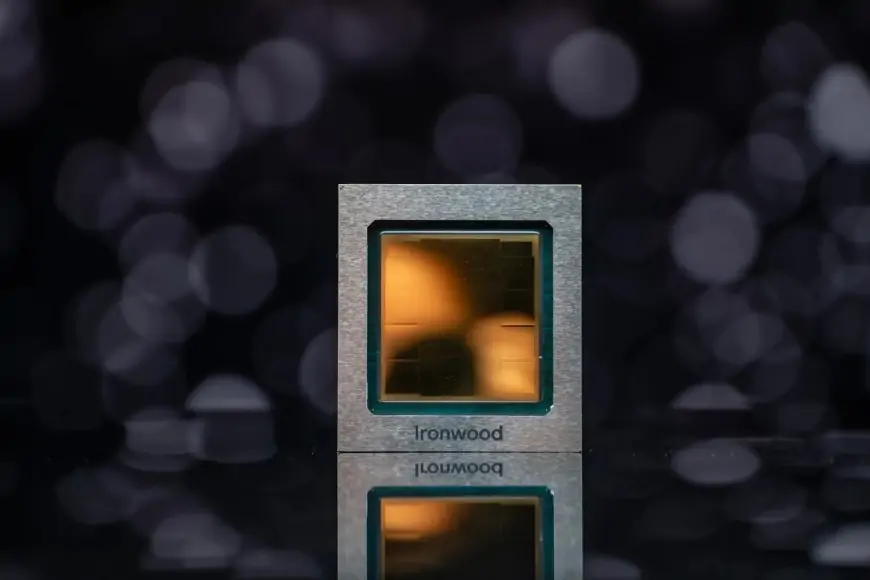

Google unveils Ironwood AI chip to redefine performance and efficiency

Google introduces Ironwood, its seventh-generation TPU, optimized for inference tasks. A full 9,216-chip pod delivers 42.5 exaflops of peak compute, which Google says is 24 times that of the world’s top supercomputer, marking a leap in AI acceleration and efficiency.

Google has unveiled Ironwood, its seventh-generation Tensor Processing Unit (TPU), at the Cloud Next 2025 conference. This cutting-edge AI accelerator chip is specifically designed to handle inference tasks—the stage where trained AI models make predictions or generate responses. Ironwood represents a significant leap in computational power and efficiency, positioning Google as a leader in the rapidly evolving AI hardware market.

Ironwood's headline numbers are large. Each chip has a peak compute of 4,614 teraflops and carries 192GB of high-bandwidth memory (HBM) with bandwidth of up to 7.4 terabytes per second. Scaled to its maximum configuration of 9,216 chips in a pod, it reaches 42.5 exaflops of peak compute, which Google puts at 24 times the computational capacity of El Capitan, the world’s fastest supercomputer. That scale is aimed squarely at large AI workloads.
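The pod-level figure follows directly from the per-chip numbers, and a quick check makes the arithmetic explicit. The sketch below uses only the figures quoted above; the constant and function names are illustrative, and the "implied baseline" is simply the pod figure divided by the quoted 24x ratio, not a measured benchmark result.

```python
# Figures quoted in the article.
PEAK_TFLOPS_PER_CHIP = 4_614   # peak compute per Ironwood chip
CHIPS_PER_POD = 9_216          # maximum pod configuration
CLAIMED_SPEEDUP = 24           # pod vs. El Capitan, as quoted

TFLOPS_PER_EXAFLOP = 1_000_000  # 1 exaflop = 10^6 teraflops


def pod_exaflops(chips: int = CHIPS_PER_POD,
                 tflops_per_chip: float = PEAK_TFLOPS_PER_CHIP) -> float:
    """Theoretical peak for a pod, assuming compute adds up linearly."""
    return chips * tflops_per_chip / TFLOPS_PER_EXAFLOP


pod = pod_exaflops()
# Baseline implied by the quoted ratio (derived, not an official figure).
implied_baseline = pod / CLAIMED_SPEEDUP

print(f"Pod peak: {pod:.1f} exaflops")
print(f"Implied baseline: {implied_baseline:.2f} exaflops")
```

Both numbers are theoretical peaks; sustained throughput on a real workload would depend on the model, the numeric precision, and interconnect overhead.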

The chip’s architecture is purpose-built for the "age of inference," where AI systems proactively retrieve and generate data to deliver insights rather than merely processing raw information. Its specialized SparseCore accelerates ranking and recommendation workloads, making it ideal for applications like personalized shopping suggestions or real-time financial analysis. Additionally, Ironwood minimizes data movement and latency on-chip, reducing power consumption while maintaining high performance.

Ironwood will be integrated into Google Cloud's AI Hypercomputer infrastructure later this year. This modular computing platform powers advanced generative AI models like Google’s Gemini and supports massive parallel processing through its Pathways runtime software. Customers will have access to two configurations: a smaller 256-chip cluster or a full-scale 9,216-chip pod.
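To get a feel for how the two configurations compare, the per-chip figures reported earlier in this article (4,614 teraflops and 192GB of HBM) can be scaled up to each size. This is a rough sketch: the `Config` class and its property names are made up for illustration, decimal units are assumed (1 TB = 1,000 GB), and the results are upper bounds that ignore any scaling losses.

```python
from dataclasses import dataclass

# Per-chip figures as reported in the article.
PEAK_TFLOPS_PER_CHIP = 4_614
HBM_GB_PER_CHIP = 192


@dataclass(frozen=True)
class Config:
    """Hypothetical helper describing one Ironwood configuration."""
    name: str
    chips: int

    @property
    def peak_exaflops(self) -> float:
        # 1 exaflop = 10^6 teraflops
        return self.chips * PEAK_TFLOPS_PER_CHIP / 1_000_000

    @property
    def hbm_terabytes(self) -> float:
        # Assumes decimal units: 1 TB = 1,000 GB
        return self.chips * HBM_GB_PER_CHIP / 1_000


CONFIGS = [Config("256-chip cluster", 256), Config("9,216-chip pod", 9_216)]

for cfg in CONFIGS:
    print(f"{cfg.name}: {cfg.peak_exaflops:.2f} exaflops peak, "
          f"{cfg.hbm_terabytes:,.0f} TB of HBM in total")
```

The full pod is 36 times the size of the smaller cluster, so under these assumptions both its peak compute and its total memory scale by the same factor.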

The launch of Ironwood comes amid fierce competition in the AI hardware space. Nvidia dominates with its GPUs, while Amazon and Microsoft are advancing proprietary AI chips such as Trainium and Maia 100. However, Google’s focus on inference optimization and energy efficiency sets Ironwood apart as an offering tailored for next-generation AI applications.

With twice the performance per watt compared to its predecessor Trillium and six times the memory capacity, Ironwood underscores Google’s commitment to pushing the boundaries of AI innovation. As the demand for generative AI continues to grow, this new TPU is poised to play a pivotal role in shaping the future of machine learning and cloud computing.